I looked into how to implement the CIN operation in PyTorch.

The math behind CIN

Assume there are $m$ fields in total, and that the embedding of each field is a $D$-dimensional vector.

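In the Compressed Interaction Network (CIN), the embedding vector is treated as the basic unit of interaction. The original input features and each hidden layer of the network are therefore each organized as a matrix, written $X^0 \in \mathbb{R}^{m \times D}$ and $X^k \in \mathbb{R}^{H_k \times D}$. Each row of $X^k$ is computed from the previous hidden layer $X^{k-1}$ and the original feature matrix $X^0$, weighted by a parameter matrix $W^{k,h} \in \mathbb{R}^{H_{k-1} \times m}$:

$$X^k_{h,*} = \sum_{i=1}^{H_{k-1}} \sum_{j=1}^{m} W^{k,h}_{ij} \left( X^{k-1}_{i,*} \circ X^0_{j,*} \right), \quad 1 \le h \le H_k$$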

Here the $k$-th hidden layer contains $H_k$ feature vectors (with $H_0 = m$), and $\circ$ is the Hadamard product, i.e. the element-wise product: $\left\langle a_1,a_2,a_3 \right\rangle \circ \left\langle b_1,b_2,b_3 \right\rangle = \left\langle a_1b_1,a_2b_2,a_3b_3 \right\rangle$.
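As a quick check of the Hadamard product definition, here is a minimal sketch in PyTorch. The vectors `a` and `b` are made-up values for illustration; in CIN the operands would be $D$-dimensional rows of $X^{k-1}$ and $X^0$.

```python
import torch

# Two hypothetical 3-dimensional vectors (illustrative values only)
a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])

# The Hadamard product is plain element-wise multiplication in PyTorch
hadamard = a * b
print(hadamard.tolist())  # [4.0, 10.0, 18.0]
```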

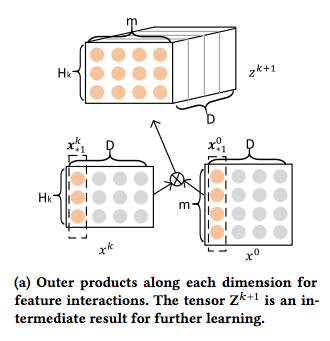

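From the state of the previous hidden layer $X^k$ and the original feature matrix $X^0$, we first compute an intermediate result $Z^{k+1}$, which is a three-dimensional tensor. Note that $\bigotimes$ in the figure is the outer product, which is just the matrix product of a column vector with a row vector: the outer product of an $m \times 1$ vector $u$ and an $n \times 1$ vector $v$ is the $m \times n$ matrix

$$u \otimes v = u v^\top, \quad (u \otimes v)_{ij} = u_i v_j$$

Along the $D$ dimension in the figure, the left and right inputs are matched up one-to-one: the $d$-th slice of $X^k$ is multiplied with the $d$-th slice of $X^0$.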
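The outer product and the slice-by-slice construction of $Z^{k+1}$ can be sketched as follows. This is only an illustration under assumed shapes: `batch_size`, `H_k`, `m` and `D` are hypothetical values, and a leading batch dimension is added because that is how the tensors usually appear in PyTorch.

```python
import torch

torch.manual_seed(0)

# Outer product of two vectors: shape (3,) and (4,) give a (3, 4) matrix
u = torch.randn(3)
v = torch.randn(4)
outer = torch.outer(u, v)  # entry (i, j) is u[i] * v[j]

# Hypothetical sizes, chosen only for illustration
batch_size, H_k, m, D = 2, 5, 4, 8
xk = torch.randn(batch_size, H_k, D)  # previous hidden layer X^k
x0 = torch.randn(batch_size, m, D)    # original feature matrix X^0

# Z^{k+1}: for every sample b and every embedding position d,
# take the outer product of column d of X^k with column d of X^0
z = torch.einsum('bhd,bmd->bhmd', xk, x0)  # (batch, H_k, m, D)

# One slice of z should match an explicit outer product
d = 3
slice_ref = torch.outer(xk[0, :, d], x0[0, :, d])
print(z.shape, torch.allclose(z[0, :, :, d], slice_ref))
```

No index is summed in this `einsum` call, so `z` only rearranges products of the inputs; the summation happens later, when the weights compress `z`.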

https://daiwk.github.io/posts/dl-dl-ctr-models.html#cin

PyTorch implementation

The PyTorch implementation relies mainly on the `torch.einsum` operation.

https://pytorch.org/docs/stable/torch.html?highlight=einsum#torch.einsum

`torch.einsum(equation, *operands) → Tensor`

This function provides a way of computing multilinear expressions (i.e. sums of products) using the Einstein summation convention.

Parameters

equation (string) – The equation is given in terms of lower case letters (indices) to be associated with each dimension of the operands and result. The left hand side lists the operands' dimensions, separated by commas. There should be one index letter per tensor dimension. The right hand side follows after -> and gives the indices for the output. If the -> and right hand side are omitted, it is implicitly defined as the alphabetically sorted list of all indices appearing exactly once in the left hand side. The indices not appearing in the output are summed over after multiplying the operands' entries. If an index appears several times for the same operand, a diagonal is taken. Ellipses … represent a fixed number of dimensions. If the right hand side is inferred, the ellipsis dimensions are at the beginning of the output.

operands (Tensor) – The operands to compute the Einstein sum of.

```python
print(a_tensor)
```

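In short, index strings such as `ik,kj` name the dimensions of the input matrices, and the part after `->` says which indices are kept in the output; any index that does not appear there is summed over.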
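A few small cases make the index notation concrete. This is a minimal sketch with made-up tensors `a` and `b`; each `einsum` call is paired with the ordinary PyTorch operation it should agree with.

```python
import torch

# Hypothetical small matrices, filled with 0..5 and 0..11
a = torch.arange(6, dtype=torch.float32).reshape(2, 3)
b = torch.arange(12, dtype=torch.float32).reshape(3, 4)

# 'ik,kj->ij': k is absent from the output, so it is summed (matrix product)
matmul = torch.einsum('ik,kj->ij', a, b)

# 'ij->ji': no index is dropped, the axes are only reordered (transpose)
transpose = torch.einsum('ij->ji', a)

# 'ij->i': j is dropped, so each row is summed
row_sums = torch.einsum('ij->i', a)

print(torch.allclose(matmul, a @ b))  # True
print(row_sums.tolist())              # [3.0, 12.0]
```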

CIN implementation

```python
import torch
a = torch.randn(2, 30, 8)  # (batch size, number of fields, embedding dim)
```
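Building on a tensor of that shape, one CIN layer (the outer product with $X^0$ followed by a weighted sum) could be written with `torch.einsum` roughly as below. This is a sketch under assumptions, not a reference implementation: `H_next`, the random weight tensor `W`, and all variable names are hypothetical, and a real model would use a learnable parameter (or an equivalent 1D convolution) instead of random weights.

```python
import torch

torch.manual_seed(0)

batch_size, m, D = 2, 30, 8  # same shape as the tensor `a` above
H_next = 16                  # hypothetical number of feature vectors in the next layer

x0 = torch.randn(batch_size, m, D)  # X^0: raw field embeddings
xk = x0                             # first layer: the previous layer is X^0 itself (H_0 = m)
H_k = xk.shape[1]

# Hypothetical weights: one (H_k, m) matrix per output feature vector
W = torch.randn(H_next, H_k, m)

# Step 1: intermediate tensor Z, the outer product along each embedding position d
z = torch.einsum('bhd,bmd->bhmd', xk, x0)      # (batch, H_k, m, D)

# Step 2: compress Z with the weights by summing over h (H_k) and m
x_next = torch.einsum('bhmd,nhm->bnd', z, W)   # (batch, H_next, D)

# The two steps can also be fused so that Z is never materialised
x_next_fused = torch.einsum('bhd,bmd,nhm->bnd', xk, x0, W)

# Sum pooling over the embedding dimension gives one scalar per feature vector
pooled = x_next.sum(dim=-1)                    # (batch, H_next)
print(x_next.shape, pooled.shape)
```

The fused form trades readability for memory: the intermediate tensor has `batch * H_k * m * D` entries, which grows quickly when there are many fields.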